Data extraction in mobile apps with Xamarin

A lot of the mobile apps we create provide an optimized interface on top of raw data retrieved from a distant service.

When that service is constructed with frontends like mobile apps in mind, it’s generally mindful of giving you a nice API so that your implementation can take into account the limitations of the device you are running on (intermittent/slow Internet access, limited screen space, unwillingness of the user to type).

However, it may also be the case that your application is trying to exploit data upon which you have no control: no choice on the format the data is exposed and no friendly medium existing to access them.

This is usually when we enter the field of Web scraping which tries to extract data from human-readable content (like websites) and turn them in machine-readable structures.

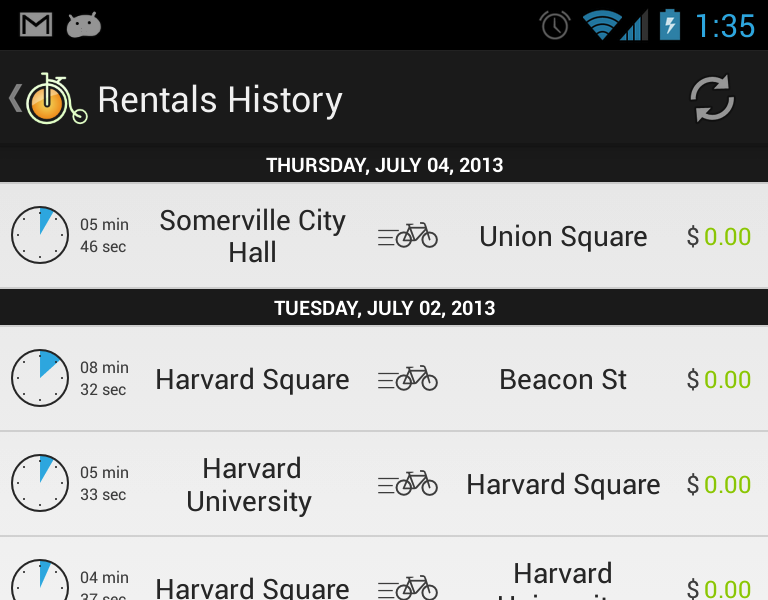

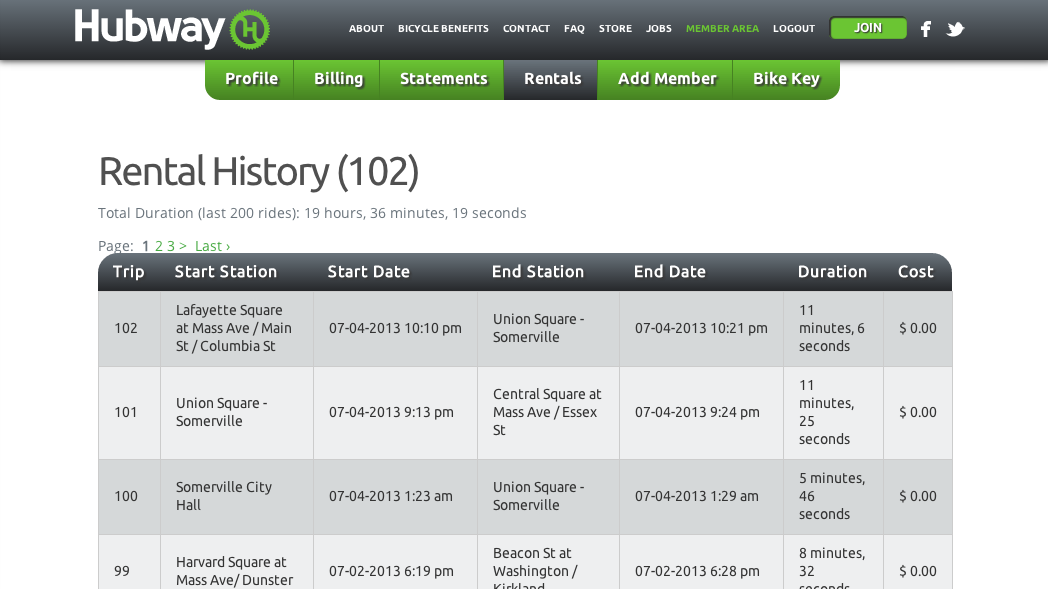

Recently in Moyeu, I implemented support for user to visualize their Hubway rental history. For those who don’t know Hubway, it’s the bike sharing system that is in place in the Boston and Cambridge areas.

The company that operates Hubway only provides a website to get this information with its own custom authentication scheme. Below is a screenshot of how it looks like:

To obtain this data in a usable form for my mobile app there were two challenges: emulating Hubway’s authentification workflow and extracting the individual cell content from the raw HTML.

Authentication

The workflow to access your rental history is roughly as follow:

- Try to access https://thehubway.com/rentals

- If your cookies contain a valid authentication token, show rentals

- If not, issue a 302 redirect to https://thehubway/login

- User types its credentials and validate

- If login is successful, another 302 is generated to redirect user to their member page

- Go back to 1

The fact that some of these steps involves network calls and that there are multiple points of failures doesn’t make this an easy task.

Previously in .NET lands, you would probably have devised a solution using WebClient and maybe some Task continuations that would have quickly turned into spaghetti code.

Thankfully with the async/await support that was added to the Xamarin family and the help of the new HttpClient swiss-army knife class in .NET 4.5, this workflow can be very straightforwardly translated into an equivalent imperative algorithm:

public async Task<Rental[]> GetRentals ()

{

bool needsAuth = false;

client = new HttpClient (new HttpClientHandler {

AllowAutoRedirect = false, // This allows us to handle Hubway's 302

CookieContainer = cookies, // Use a durable store for authentication cookies

UseCookies = true

});

// Instead of using a infinite loop, we want to exit early since the problems

// might be due to a broken website or terrible network conditions

for (int i = 0; i < 4; i++) {

try {

if (needsAuth) {

var content = new FormUrlEncodedContent (new Dictionary<string, string> {

{ "username", credentials.Username },

{ "password", credentials.Password }

});

var login = await client.PostAsync (HubwayLoginUrl, content).ConfigureAwait (false);

if (login.StatusCode == HttpStatusCode.Found)

needsAuth = false;

else

continue;

}

var answer = await client.GetStringAsync (rentalsUrl).ConfigureAwait (false);

return ProcessHtml (answer);

} catch (HttpRequestException htmlException) {

/* Unfortunately, HttpRequestException doesn't allow us

* to access the original http status code */

if (!needsAuth)

needsAuth = htmlException.Message.Contains ("302");

continue;

} catch (Exception e) {

Log.Error ("RentalsGenericError", e.ToString ());

break;

}

}

return null;

}This method takes an optimistic approach by reusing a cookie container that is stored durably (using XML serialization) and in case of a 302 (which indicates user needs to re-authenticate), we handle gracefully the “error” condition by restarting the loop and sending the user credentials we previously acquired to refresh our cookie-based tokens.

HTML extraction

This part is actually very simple thanks to a library that anyone who has had to deal with HTML knows: HtmlAgilityPack.

For people who don’t know it, HtmlAgilityPack allows you to parse HTML document and, contrary to most traditional XML parser, it’s able to recover from badly written content (much like your web browser).

The library being mostly pure cross-platform C#, it’s very easy to drop in your Xamarin mobile projects. You can reuse my cleaned up version which is, in that case, exposed as a Xamarin.Android library project.

When PCL support becomes mainstream, it should be fairly straightforwards to turn this into a portable equivalent.

For those who used HtmlAgilityPack a long time ago, it used to be a tedious business to walk the parsed HTML tree. This is not true anymore since they had the delightful idea to create a new API similar in spirit to System.Xml.Linq to access the resulting DOM.

In our rentals case, Hubway’s HTML has that kind of shape:

html

+- body

+- div [id="content"]

+- table

+- tbody

+- tr

+- td <-- the fields we are interested in

Which, thanks to HtmlAgilityPack, we can extract in the following simple way:

// HTML data we got earlier

string answer = ...;

var doc = new HtmlDocument ();

doc.LoadHtml (answer);

var div = doc.GetElementbyId ("content");

var table = div.Element ("table");

return table.Element ("tbody").Elements ("tr").Select (row => {

var items = row.Elements ("td").ToArray ();

return new Rental {

Id = long.Parse (items[0].InnerText.Trim ()),

FromStationName = items [1].InnerText.Trim (),

ToStationName = items [3].InnerText.Trim (),

Duration = ParseRentalDuration (items [5].InnerText.Trim ()),

Price = ParseRentalPrice (items [6].InnerText.Trim ()),

DepartureTime = DateTime.Parse(items[2].InnerText, CultureInfo.InvariantCulture),

ArrivalTime = DateTime.Parse(items[4].InnerText, CultureInfo.InvariantCulture)

};

}).ToArray ();Conclusion

With our devices getting more and more powerful, we are slowly liberated from the constraints of being able to only handle pre-massaged data and instead do more complex content processing.

One thing to keep in mind is that, as with general scraping, the main drawback of getting your data like that is that you are at the mercy of the website keeping a relative compatibility of its HTML structure for your app to continue to function properly.

This means that, for no apparent reasons to your users, if the website changes your app might suddenly stop working or behave strangely. In that case, you will have to react promptly to correct the problem which is not always possible with some appstore and their review process.

On the other hand, for those who want to add a companion mobile app to an existing web-based application without having to re-architecture their entire data layer into a friendly API, they can use this technique to quickly build something.

Facebook, for instance, are known to use the raw HTML of their mobile website http://m.facebook.com to provide the content of their native iOS app.